Lawmakers and tech groups fight back against deepfakes

Tech giants, cyber security groups and governments are stepping up the battle against deepfakes, which use artificial intelligence to produce lifelike video and audio of real people doing unreal things.

Billed as the next chapter in the fake news era, synthetic media can be used for many malicious purposes, from false presidential threats of war to a faked call from your chief executive requesting a wire transfer.

“There is a broad attack surface here, not just military and political but also insurance, law enforcement and commerce,” says Matt Turek, programme manager for MediFor, a media forensics research programme led by the Defense Advanced Research Projects Agency, part of the US defence department.

Image manipulation is nothing new. In 1865, a portrait painter put together two images to give President Abraham Lincoln a more heroic posture, while Soviet leader Joseph Stalin employed an army of retouchers whose tasks included removing from official photographs individuals who fell out of favour.

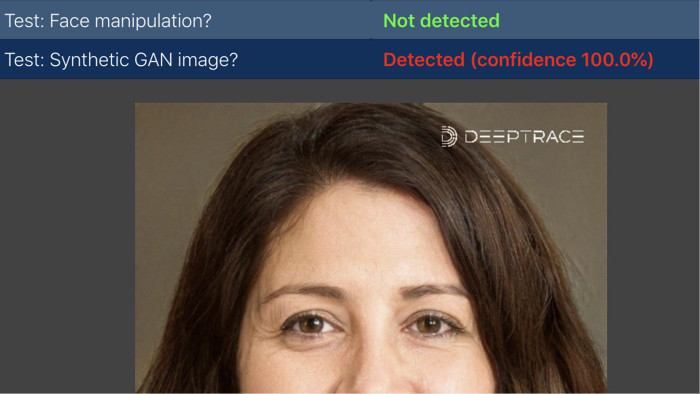

Today, three technological shifts have taken media manipulation to new levels: the development of large image databases, the computing power of graphics processing units (GPUs), and neural networks, an AI technique. The number of deepfake videos published online has doubled in the past nine months to almost 15,000, according to DeepTrace, a Netherlands-based cyber security group.

In an effort to fight back against deepfakes, academic experts and tech companies are looking for ways to detect and remove such content from the internet. Google has released deepfake samples to help researchers build detection technologies, while Facebook and Microsoft have teamed up with universities to offer awards to researchers who develop ways of spotting and preventing the spread of manipulated media.

Telltale signs of manipulation include low-level pixelation patterns that a machine learning algorithm could spot, says Mr Turek, as well as inconsistencies in light, shadow and geometry. Factual analysis can also be performed, such as comparing outdoor images or video with data about the weather or the sun angle at the time. However, researchers acknowledge that malicious actors will evolve their tactics, leading to a cat-and-mouse game.

Lawmakers are also stepping into the fray, with a growing number of bills introduced in the US Congress and state legislatures. Texas and California have criminalised the use of deepfakes that interfere with elections, and Virginia has banned the use of deepfake technology to make non-consensual pornography, the dominant use case. A report from New York University called deepfakes a “credible threat” to the US 2020 elections.

But there are challenges with response efforts, including whether the successful evasion of detection technology would legitimise a deepfake.

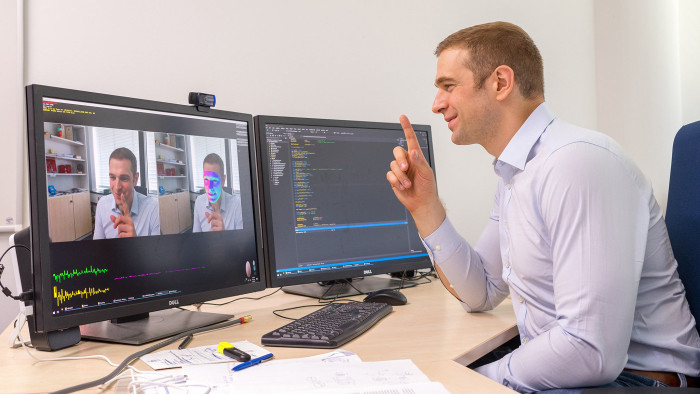

“If Google or Facebook puts out a deepfake [detection] technology and it works in 95 per cent of the time, that would be . . . better than what we have now,” says Matthias Niessner, a professor at the Technical University of Munich who is working on FaceForensics, a data set for developing forgery detection tools. “But if it doesn’t work at a certain point, they would have a huge problem. If I was a tech company, I would have concerns putting out anything that doesn’t work 100 per cent of the time.”

Detection tools also cannot solve the “liar’s dividend” problem in which an individual is genuinely caught saying or doing something compromising and dismisses the evidence as a fake, since it is difficult to prove that something is categorically not fake.

Nonetheless, dystopian fears of world wars and election-tilting from deepfake videos are overblown, says Henry Ajder, head of research at DeepTrace. The idea that more people can produce realistic videos, increasing the likelihood of malicious actors, is also not credible, he adds.

“The technology, as much as it is becoming more realistic and efficient, is not at a level at which any person in the street is going to be 100 per cent convinced,” he says.

Deepfakes should also be understood as reflecting a social problem rather than just a technical one, according to experts. “Deepfakes are most likely to work in places with polarisation and hyper-partisan communities because it will give a community that really wants to espouse a certain point of view the proof they are looking for,” says Ben Nimmo, head of investigations at Graphika, a social media analytics company.

A doctored “shallow fake” video of Nancy Pelosi, which was slowed down to make the speaker of the US House of Representatives appear drunk, did not fool mainstream media, but that was not the intention of its makers, says Mr Ajder. Instead, it was “spread on Facebook and Twitter into echo chambers in which people aren’t looking to critically evaluate the media they consume. They are looking for anything that confirms their existing biases and beliefs.”

A further detection challenge is that deepfakes can be damaging in the gap between when they are published and when they are flagged.

A recent report from business intelligence and investigations group Kroll, which found that 84 per cent of surveyed business leaders feel threatened by “digital hearsay”, cited the case of an ecommerce group that lost 71 per cent of its market value after false reports of its accounts circulated among traders via WhatsApp.

One bright spot, say experts, is the proliferation of deepfakes themselves, which is sensitising the public to their existence. Deepfakes can also be a force for innovation, from lip-syncing and movie production through to health.

A Canadian start-up developed personalised synthetic voices for sufferers of motor neurone disease who eventually lose the ability to speak. Massachusetts Institute of Technology and Unicef, the UN agency for children, created synthetic images of how London and Tokyo might look if they were bombed, to foster empathy with those fleeing war.

“Rising public awareness about deepfakes raises the bar on an adversary, in terms of what they need to do to create a compelling manipulation,” says Mr Turek.
